Data centers have always been the digital backbone of modern society, but with the rise of artificial intelligence (AI), machine learning, and other data-intensive technologies, these facilities have become more critical, and more closely scrutinized, than ever. Commissioning, already crucial to ensuring that power, cooling, networking, and related systems function reliably, has also become more complex. Yet traditional commissioning management platforms, designed primarily for simpler use cases, often fail to meet the unique demands of high-density, mission-critical data centers. This article explores why data center commissioning stands apart, walks through the traditional commissioning process, and shows how emerging requirements and advanced tools can better address modern challenges.

The Unique Rigors of Data Center Commissioning

Evolving Technical Demands

Data centers have rapidly advanced from serving standard IT workloads to hosting AI-driven applications requiring massive computational power, specialized hardware (like GPUs), and intricate cooling solutions. Key drivers of this increasing complexity include:

- High-Density Computing: AI and HPC (High-Performance Computing) racks can draw substantially more power and generate more heat than typical enterprise servers.

- Advanced Cooling Systems: Liquid cooling, immersion cooling, and other novel methods are becoming more common, each requiring specialized commissioning checks and protocols.

- Redundancy and Resilience: Data centers must plan for continuous uptime, incorporating multiple layers of failover systems and robust backup power.

- Network Complexity: Modern data centers use spine-leaf topologies or other multi-tier networks that require extensive validation to confirm speed and reliability across operating conditions.

- Regulatory and Security Requirements: Stringent standards for data privacy, cybersecurity, and physical security add additional layers of testing and documentation.

These factors elevate the importance of a thorough, methodical, and well-documented commissioning strategy—one that can scale with evolving data center infrastructure.
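The power-to-heat relationship behind high-density computing can be made concrete with a worked conversion. Essentially all electrical power consumed by IT equipment is released as heat, so a rack's power draw translates directly into the cooling it needs. The minimal sketch below uses the standard conversions 1 kW = 3,412.14 BTU/h and 1 ton of refrigeration = 12,000 BTU/h; the 8 kW and 40 kW rack figures are illustrative assumptions, not measured values.

```python
# Convert rack electrical load to cooling load, assuming (as is standard for
# IT equipment) that essentially all input power is rejected as heat.
BTU_PER_HOUR_PER_KW = 3412.14   # 1 kW = 3,412.14 BTU/h
BTU_PER_HOUR_PER_TON = 12000.0  # 1 ton of refrigeration = 12,000 BTU/h


def cooling_load(rack_kw: float) -> tuple[float, float]:
    """Return (BTU/h, refrigeration tons) needed to remove a rack's heat."""
    if rack_kw < 0:
        raise ValueError("rack power must be non-negative")
    btu_per_hour = rack_kw * BTU_PER_HOUR_PER_KW
    return btu_per_hour, btu_per_hour / BTU_PER_HOUR_PER_TON


# Illustrative comparison: a hypothetical 8 kW enterprise rack vs a 40 kW AI rack.
for label, kw in [("enterprise", 8.0), ("AI/HPC", 40.0)]:
    btu, tons = cooling_load(kw)
    print(f"{label}: {kw:.0f} kW -> {btu:,.0f} BTU/h ({tons:.1f} tons)")
```

Because the relationship is linear, a rack drawing five times the power needs five times the heat removal, which is why high-density racks push designs toward the liquid and immersion cooling described above.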

Traditional Commissioning Management Software Shortcomings

Traditional commissioning platforms often originated from simpler commercial or residential projects. They typically feature:

- Single-Type Checklists and Forms: Many older platforms offer only one checklist template or test form. Data center projects, with their diverse systems and multi-phase processes, demand multiple types of specialized checklists and flexible forms that can be categorized, executed, tracked, and reported on separately.

- Rigid Structures: Systems that rely on predefined, static workflows can hamper the dynamic, multi-phase testing that data centers require. In these facilities, test sequences can change based on real-time conditions or on findings from earlier test phases.

- Minimal Integration: Legacy tools often lack integrations with modern project management platforms (e.g., Autodesk Construction Cloud, Procore) and building information modeling (BIM) tools (e.g., Revit). This gap matters because data centers increasingly rely on sophisticated digital twins or 3D models to plan and validate capacities.

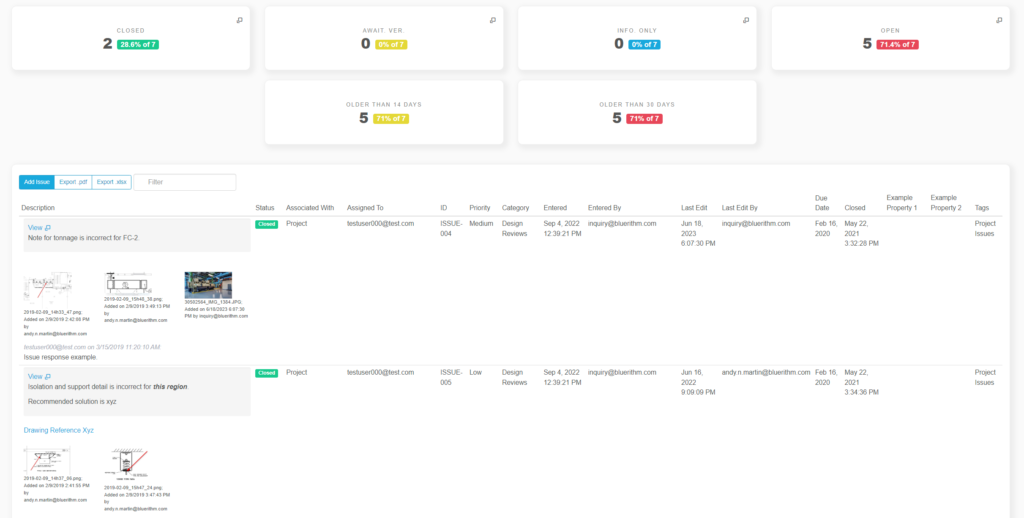

- Limited Reporting & Analytics: Simplistic reporting mechanisms fall short when addressing the complex data sets generated by advanced testing procedures in data centers. Detailed analytics, trend tracking, and immediate insights are critical but frequently unavailable in older platforms.
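What it means for checklists to be categorized, executed, tracked, and reported on separately can be sketched as a small data model. The checklist categories, field names, and `status_report` helper below are hypothetical illustrations, not the schema of any particular commissioning platform.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from enum import Enum


class ChecklistType(Enum):
    # Hypothetical checklist categories; a real project defines its own.
    FACTORY_WITNESS = "factory witness test"
    SITE_INSPECTION = "site acceptance inspection"
    PRE_FUNCTIONAL = "pre-functional checklist"
    FUNCTIONAL = "functional performance test"
    INTEGRATED = "integrated systems test"


@dataclass
class Checklist:
    equipment_id: str
    kind: ChecklistType
    status: str = "open"  # "open", "passed", or "failed"


def status_report(checklists: list[Checklist]) -> dict[ChecklistType, Counter]:
    """Count checklist statuses separately for each checklist type."""
    report: dict[ChecklistType, Counter] = defaultdict(Counter)
    for checklist in checklists:
        report[checklist.kind][checklist.status] += 1
    return dict(report)


items = [
    Checklist("UPS-01", ChecklistType.FACTORY_WITNESS, "passed"),
    Checklist("UPS-01", ChecklistType.PRE_FUNCTIONAL, "failed"),
    Checklist("CRAH-03", ChecklistType.PRE_FUNCTIONAL, "passed"),
]
for kind, counts in status_report(items).items():
    print(kind.value, dict(counts))
```

Keeping the type on each checklist, rather than in a single shared template, is what lets each category be filtered and reported on independently.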

The Five Levels of Data Center Commissioning

Despite the evolving requirements, data centers still rely on a proven, multi-phase approach to ensure all systems work reliably under various conditions. Each level introduces more complexity, building on the previous phase’s findings.
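The principle that each level builds on the previous one can be expressed as a simple gate: equipment may not begin level N until it has passed every lower level. The following is a hypothetical sketch; the `Level` enum and helper names are illustrative, and the tag colors follow the ones used in this article.

```python
from enum import IntEnum
from typing import Optional


class Level(IntEnum):
    """Commissioning levels, tagged with the colors used in this article."""
    FACTORY_WITNESS = 1  # red tag
    SITE_ACCEPTANCE = 2  # yellow tag
    PRE_FUNCTIONAL = 3   # green tag
    FUNCTIONAL = 4       # blue tag
    INTEGRATED = 5       # white tag


def can_start(level: Level, passed: set[Level]) -> bool:
    """A level may start only after every lower level has passed."""
    return all(Level(n) in passed for n in range(1, level))


def next_level(passed: set[Level]) -> Optional[Level]:
    """Return the lowest level not yet passed, or None if all five have passed."""
    for level in Level:
        if level not in passed:
            return level
    return None


# Hypothetical status for one UPS: levels 1 and 2 complete.
passed = {Level.FACTORY_WITNESS, Level.SITE_ACCEPTANCE}
print(can_start(Level.PRE_FUNCTIONAL, passed))  # True
print(can_start(Level.FUNCTIONAL, passed))      # False
print(next_level(passed).name)                  # PRE_FUNCTIONAL
```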

Level 1 – Factory Witness Testing (Red Tag)

Objective: Confirm that critical components meet performance and quality standards before they arrive on-site.

- Performance Verification: Testing under controlled conditions at the manufacturer’s facility.

- Standards Compliance: Ensuring all specifications align with regulatory and design requirements.

- Quality Inspection: Identifying any defects or inconsistencies early to avoid costly site fixes.

Commonly tested components include UPS systems, power distribution units (PDUs), cooling units, emergency generators, transfer switches, and core network equipment.

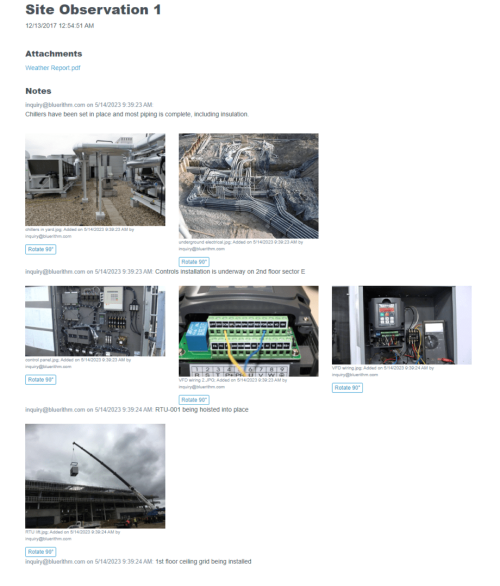

Level 2 – Site Acceptance Inspection (Yellow Tag)

Objective: Validate equipment condition and readiness upon delivery to the data center.

- Shipping Damage Assessment: Checking for issues caused by transport.

- Environmental Checks: Ensuring site conditions match the manufacturer’s guidelines for temperature, humidity, and cleanliness.

- Documentation Review: Confirming that installation plans, maintenance records, and warranty details are in place.

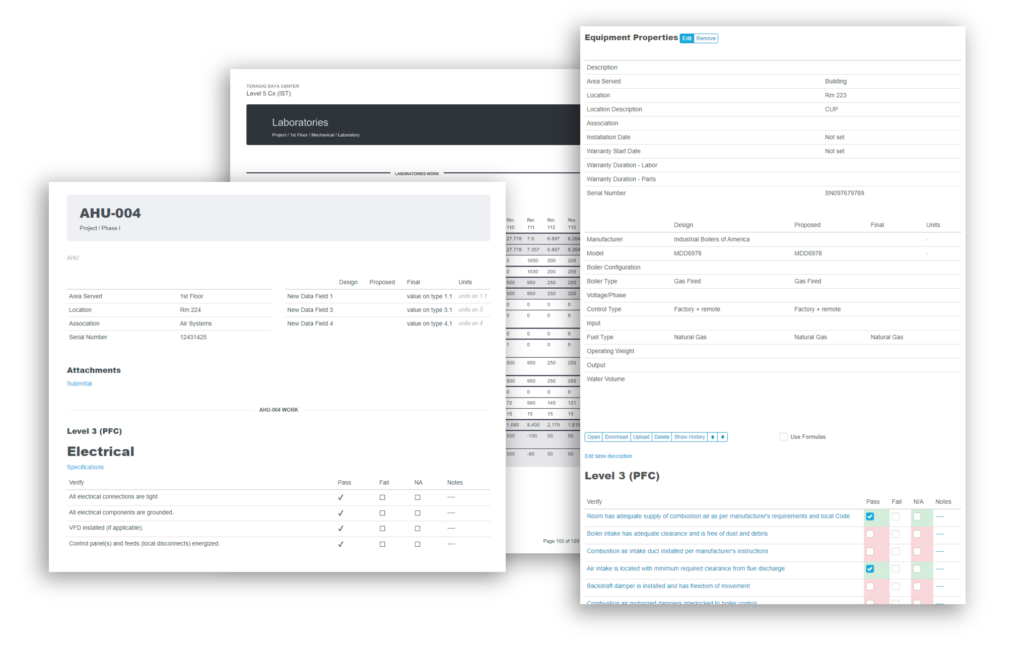
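The environmental portion of a site acceptance inspection amounts to comparing recorded site conditions against the manufacturer's allowable ranges. A minimal sketch, assuming readings are captured as numbers; the limits in the hypothetical `LIMITS` table are placeholders rather than values from any manufacturer or standard, and cleanliness, which is usually a visual check, is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Range:
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


# Placeholder limits; substitute the manufacturer's published values.
LIMITS = {
    "temperature_c": Range(5.0, 40.0),
    "relative_humidity_pct": Range(10.0, 85.0),
}


def environmental_findings(readings: dict[str, float]) -> list[str]:
    """Return one finding per reading that is missing or out of range."""
    findings = []
    for name, limit in LIMITS.items():
        value = readings.get(name)
        if value is None:
            findings.append(f"{name}: no reading recorded")
        elif not limit.contains(value):
            findings.append(f"{name}: {value} outside {limit.low}-{limit.high}")
    return findings


print(environmental_findings({"temperature_c": 22.5, "relative_humidity_pct": 91.0}))
```

Treating a missing reading as a finding, not as a pass, keeps gaps in the inspection record visible.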

Level 3 – Pre-Functional Testing (Green Tag)

Objective: Verify installation quality and basic operational readiness.

- Installation Compliance: Confirming that the equipment is installed correctly and matches design documents.

- Initial Calibration & Operation: Starting up systems in a controlled manner to verify basic functionality.

- Control System Checks: Ensuring building management and automation systems interface correctly with new equipment.

Level 4 – Functional Performance Testing (Blue Tag)

Objective: Conduct full operational testing under real-world loads and scenarios.

- Load Testing: Running systems near or at design capacity to validate performance and detect weaknesses.

- Alarm & Monitoring Verification: Confirming that all alert conditions function properly and generate the correct notifications.

- Efficiency Metrics: Gathering data on power usage effectiveness (PUE) or other key performance indicators.

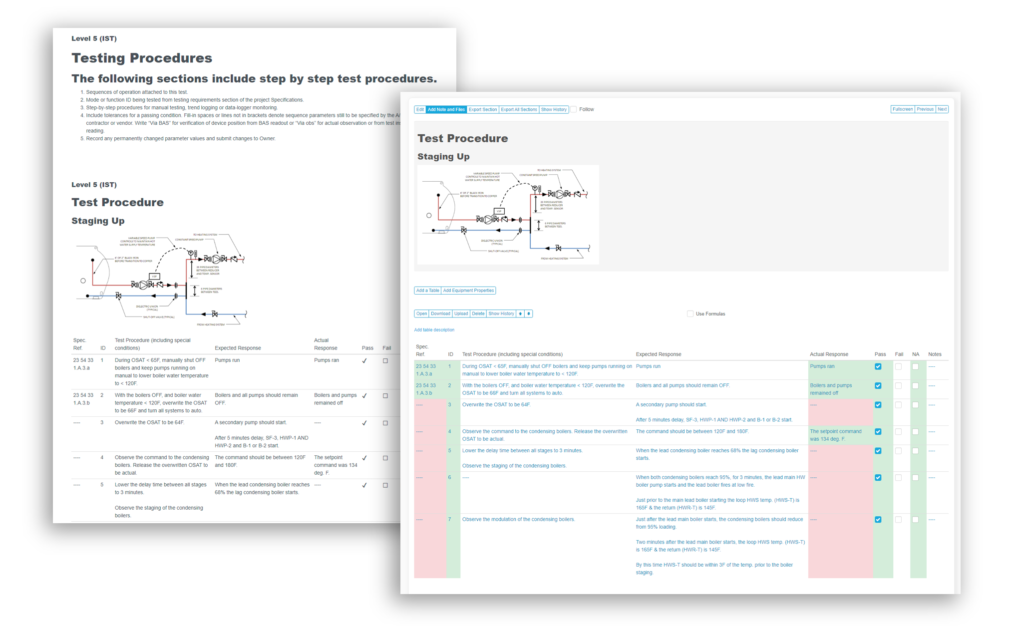
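PUE is defined as total facility energy divided by the energy delivered to IT equipment, so a value of 1.0 would mean no overhead at all for cooling, power conversion, and lighting. The small helper below computes it from metered energy over a load-test interval; the meter readings in the example are made up.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness = total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical meter readings over one load-test interval.
print(round(pue(total_facility_kwh=1300.0, it_equipment_kwh=1000.0), 2))  # 1.3
```

Both readings should cover the same interval; mixing a short IT sample with a longer facility sample would distort the ratio.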

Level 5 – Integrated Systems Testing (White Tag)

Objective: Ensure all systems interact seamlessly in various normal and failure scenarios.

- Failure Mode Testing: Simulating power failures, cooling anomalies, and network outages to evaluate failover processes.

- Recovery Procedures: Verifying that systems recover automatically and in the correct sequence without human intervention.

- Comprehensive Documentation: Finalizing all test results, operating procedures, and emergency protocols for handover.

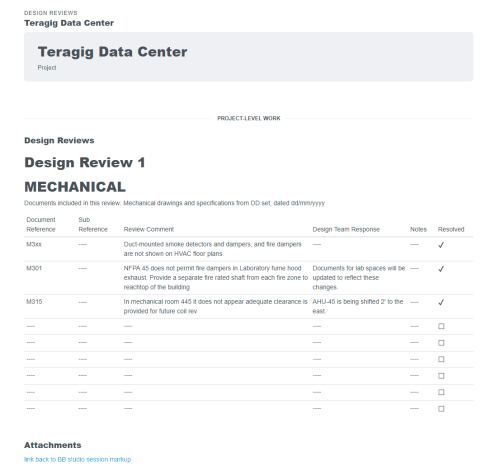
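Checking that systems recovered in the correct sequence can be automated by comparing the timestamps captured during a failure test against the expected restart order. A sketch under assumed conditions: the step names, the expected order, and the assumption that the monitoring system exports timestamped events are all hypothetical.

```python
from datetime import datetime, timedelta

# Hypothetical restart sequence after a simulated utility power failure.
EXPECTED_ORDER = [
    "generators_online",
    "ats_transferred",
    "cooling_restarted",
    "bms_alarms_cleared",
]


def sequence_errors(events: dict[str, datetime], expected: list[str]) -> list[str]:
    """List missing steps and any step logged earlier than the step before it."""
    errors = [f"missing: {step}" for step in expected if step not in events]
    present = [step for step in expected if step in events]
    for earlier, later in zip(present, present[1:]):
        if events[later] < events[earlier]:
            errors.append(f"out of order: {later} before {earlier}")
    return errors


# Hypothetical timestamps exported from a monitoring system during the test.
t0 = datetime(2025, 1, 1, 2, 0, 0)
log = {
    "generators_online": t0 + timedelta(seconds=10),
    "ats_transferred": t0 + timedelta(seconds=12),
    "cooling_restarted": t0 + timedelta(seconds=40),
}
print(sequence_errors(log, EXPECTED_ORDER))  # ['missing: bms_alarms_cleared']
```

Comparing only consecutive steps is sufficient: if every adjacent pair is in order, the whole recorded sequence is in order.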

Why Traditional Platforms Fall Short for Data Centers

Given the multi-layered nature of these test phases and the diversity of equipment, commissioners need flexible tools. Traditional platforms often fall short in four areas:

- Checklist Rigidity: Using the same, or overly simplistic, forms at every level leads to incomplete or inaccurate results, especially when each data center system demands nuanced questions and steps.

- System Complexity: Integrated testing across power, cooling, security, and networking strains older platforms not designed to track and correlate large volumes of test data.

- Real-Time Adjustments: Data center commissioning often requires quick adaptation of test procedures based on real-time conditions, which rigid workflows cannot accommodate.

- Data Handling & Analytics: AI-driven loads produce vast data sets. Traditional systems typically lack the ability to parse, track, and generate actionable insights from this volume of data.

Next-Generation Commissioning Management Software for Data Centers

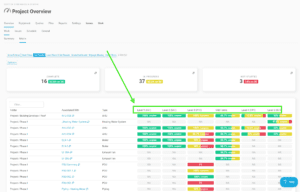

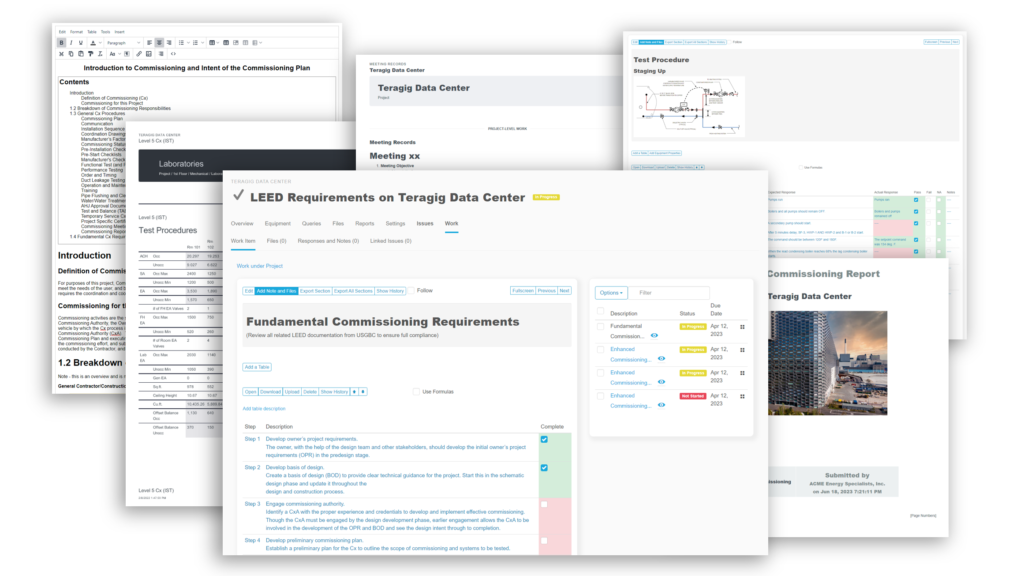

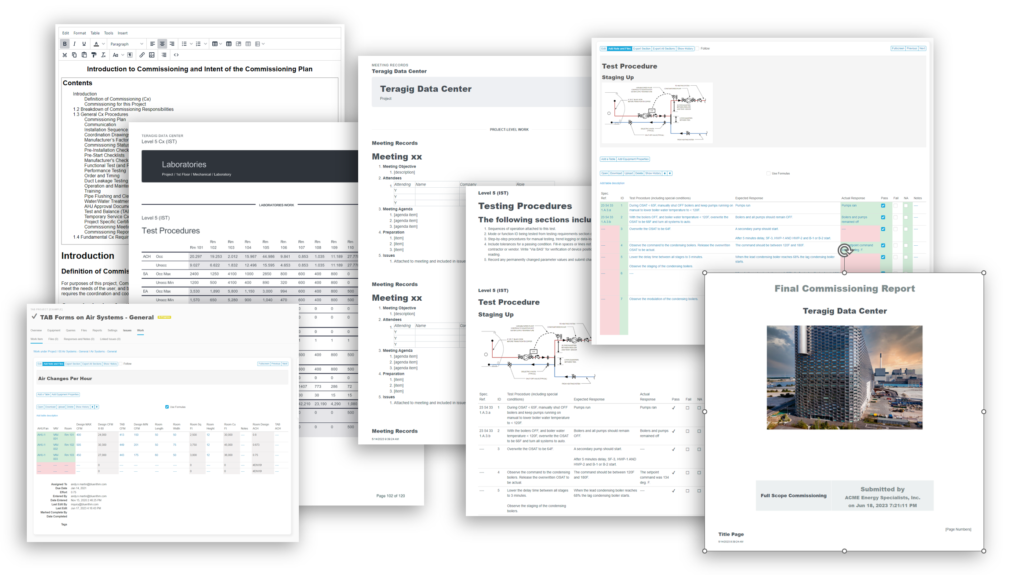

To address these challenges, modern commissioning management solutions like Bluerithm support:

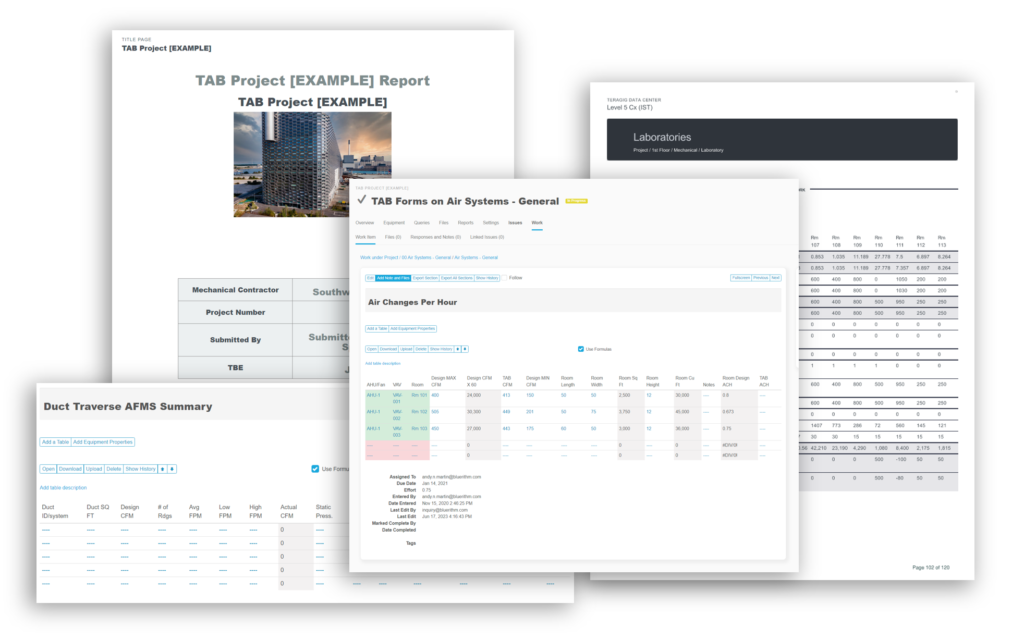

- Multiple Types of Customizable Checklists and Test Forms: The system must make it easy to create, replicate, and report on the forms and processes for all five levels of commissioning. Systems such as advanced power distribution, liquid cooling, and AI server clusters each require unique test processes.

- Flexible, Dynamic Workflows: Customizable workflows and processes, with real-time updates that reflect mid-commissioning changes or newly discovered issues, help ensure that no detail is overlooked.

- Deep Integrations: Compatibility with BIM software, digital twins, project management platforms (e.g., Autodesk Construction Cloud, Procore), and open APIs enables a holistic approach that ties design data, installation records, and operational analytics together. It also allows equipment and commissioning data to be transferred seamlessly to the owner’s long-term facility management systems.

- Advanced Reporting & Analytics: Real-time, customizable, shareable dashboards, granular metrics, automated alerts, and integration with maintenance systems help ensure that all stakeholders see the critical information they need.
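One way a workflow can react to newly discovered issues is to treat a failed test as an event that opens an issue and queues a retest, instead of following a fixed script. The sketch below is hypothetical; the `Workflow` class and its methods are illustrative and do not correspond to any product's API.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Workflow:
    queue: deque = field(default_factory=deque)      # tests waiting to run
    issues: list[str] = field(default_factory=list)  # open issue descriptions
    log: list[str] = field(default_factory=list)     # record of executed tests

    def add_test(self, test_id: str) -> None:
        self.queue.append(test_id)

    def record_result(self, test_id: str, passed: bool, note: str = "") -> None:
        """Log a result; on failure, open an issue and queue a retest."""
        self.log.append(f"{test_id}: {'pass' if passed else 'fail'}")
        if not passed:
            self.issues.append(f"{test_id}: {note or 'failed'}")
            self.queue.append(f"{test_id}-retest")

    def run(self, results: dict[str, bool]) -> None:
        """Run queued tests; `results` stands in for real field outcomes."""
        while self.queue:
            test_id = self.queue.popleft()
            # Tests without a scripted result are assumed to pass in this sketch.
            self.record_result(test_id, results.get(test_id, True))


wf = Workflow()
for test in ["UPS-01-load", "CRAH-03-airflow"]:
    wf.add_test(test)
wf.run({"UPS-01-load": False})
print(wf.log)
print(wf.issues)
```

The retest is appended to the end of the queue, so other scheduled work continues while the issue is resolved.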

Renewed Emphasis in Light of AI

With AI workloads becoming mainstream, the ability to forecast load patterns and proactively adapt testing procedures is pivotal. Advanced commissioning tools can integrate with AI simulation or “digital twin” models to:

- Predict performance under various workload scenarios.

- Map potential points of failure at high load or extended run times.

- Support “what-if” analyses, refining emergency and failover strategies.
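A what-if analysis can be as simple as removing one component at a time and checking whether the remaining capacity still covers the load, which is the essence of an N+1 check. A sketch under assumed numbers: the unit names, capacities, and IT heat load are illustrative, and a real digital twin would model far more (airflow, temperatures, control response).

```python
def single_failure_shortfalls(unit_capacity_kw: dict[str, float],
                              load_kw: float) -> dict[str, float]:
    """For each unit, simulate losing it and report any capacity shortfall."""
    total = sum(unit_capacity_kw.values())
    shortfalls = {}
    for unit, capacity in unit_capacity_kw.items():
        remaining = total - capacity
        if remaining < load_kw:
            shortfalls[unit] = load_kw - remaining
    return shortfalls


# Hypothetical cooling units (kW of heat rejection) serving a 900 kW IT load.
units = {"CRAH-1": 300.0, "CRAH-2": 300.0, "CRAH-3": 300.0, "CRAH-4": 250.0}
print(single_failure_shortfalls(units, load_kw=900.0))
```

An empty result means the system tolerates the loss of any single unit; here, losing any of the larger units leaves a 50 kW shortfall even though total capacity exceeds the load.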

Best Practices for Modern Data Center Commissioning

Plan Early and Collaboratively

Engage all stakeholders, including design engineers, contractors, and commissioning agents, during the early design stages. AI-driven workloads may require specialized infrastructure changes that must be accounted for upfront.

Document Thoroughly and Dynamically

Maintain up-to-date records of designs, test results, and issue resolutions in a central repository. Ensure that all documentation systems are easy to update and share.

Simulate Realistic Loads

Incorporate partial and full load simulations reflective of AI training and HPC operations. Verify how each system handles peak usage and sudden shifts in workload.

Integrate Risk Management

Use formal risk assessments to identify high-impact systems or single points of failure, particularly relevant for AI operations that can magnify the consequences of downtime.

Adapt Testing Strategies

Remain flexible. Adjust testing sequences or intervals based on real-time insights, new technologies introduced mid-project, or evolving client requirements.
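As one example of the formal risk assessment recommended under Integrate Risk Management, the failure mode and effects analysis (FMEA) convention scores each failure mode for severity, occurrence, and detection (commonly on 1-10 scales) and ranks them by the product of the three, the risk priority number. FMEA is only one option; the failure modes and scores below are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    description: str
    severity: int    # 1 (negligible) to 10 (catastrophic)
    occurrence: int  # 1 (rare) to 10 (frequent)
    detection: int   # 1 (almost certainly detected) to 10 (likely undetected)

    def __post_init__(self) -> None:
        for name in ("severity", "occurrence", "detection"):
            if not 1 <= getattr(self, name) <= 10:
                raise ValueError(f"{name} must be between 1 and 10")

    @property
    def rpn(self) -> int:
        """Risk priority number: severity x occurrence x detection."""
        return self.severity * self.occurrence * self.detection


def rank(modes: list[FailureMode]) -> list[FailureMode]:
    """Highest-risk failure modes first, to prioritize commissioning tests."""
    return sorted(modes, key=lambda m: m.rpn, reverse=True)


modes = [
    FailureMode("Single utility feed to main switchboard", 9, 3, 4),
    FailureMode("CDU pump failure on liquid-cooled AI racks", 8, 4, 3),
    FailureMode("BMS alarm not routed to on-call staff", 6, 3, 7),
]
for m in rank(modes):
    print(m.rpn, m.description)
```

A poorly detectable failure can outrank a more severe one, which is a useful prompt to test alarm routing as rigorously as the equipment itself.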

Conclusion

As data centers grow more complex—driven by high-density computing, AI-driven workloads, and strict uptime requirements—the importance of a robust, adaptable commissioning process cannot be overstated. Traditional commissioning management platforms, designed for less demanding and simpler projects, often cannot handle the sheer scope and detail of modern data center commissioning. By embracing next-generation solutions like Bluerithm that offer flexible workflows, multiple form types, multiple phases, real-time analytics, and deep integrations, data center teams can ensure that each phase of commissioning—from factory witness testing to integrated systems evaluation—validates reliability, efficiency, and long-term performance.

In an era defined by data-driven innovation, commissioning is the critical foundation that keeps the world’s most important computational engines running reliably, ensuring that new technologies have the robust infrastructure they need to thrive.